Orbital Inspectors

If the space station gets smacked by a micrometeoroid, an array of devices can find—and fix—the damage.


The piece of orbital junk closed in on the International Space Station at 29,000 mph. Six crew members evacuated to two Soyuz space capsules that would be their lifeboats if the debris made contact. The astronauts had no tools designed to find and repair significant damage and had only one option: Undock, and abandon the $100 billion Earth-orbiting laboratory. At 8:08 a.m. on June 28, 2011, the object and the station flew past each other—a harrowing 1,100 feet apart at closest approach.

That near-collision was the second time in two years a station crew had to seek shelter in the capsules. Nineteen other times between 1999 and August 2014, NASA performed “debris avoidance maneuvers,” moving the entire station out of the way of incoming objects. Such a maneuver can be undertaken only when ground controllers detect the debris early enough. Even then, engineers must weigh the probability that the station will be hit against the maneuver’s potential effect on external station research, and predict any danger the maneuver poses to the crew.

The risk associated with micrometeoroids and orbital debris is not trivial. Impacts happen regularly, although the station has so far been spared a major hit. Engineers and safety officers at NASA have given a lot of thought to the tools that a station crew could use to respond to a significant collision. The first step is simply being able to inspect the exterior of the station for damage. Astronauts’ inability to adequately survey their spacecraft has been a problem ever since one of Apollo 13’s oxygen tanks exploded on the way to the moon. It surfaced again, more recently, when the space shuttle Columbia burned up on reentry because of an undetected breach in the leading edge of a wing.

“We should never allow an Apollo 13 or a Columbia to happen again, where we choose not to have an ability to see the exterior of the spacecraft,” says George Studor, a former senior project engineer at Johnson Space Center in Houston. Studor specializes in spacecraft inspection technologies, and now provides consulting for NASA contractors.

The goal now is to get real-time observation in as many places in and around the space station as possible. The range of technology that NASA and its partners are working on is broad—from high-definition external cameras to autonomous robots that can fly around or crawl on the outside of the station to investigate and repair damage.

Studor has been involved in the in-space inspection effort for decades. He joined NASA’s space shuttle program in 1983, as a U.S. Air Force pilot detailed to the agency to improve the shuttle turnaround in between flights. After the Columbia accident, he worked on impact detectors inside the shuttle’s wings. “Either you design [the spacecraft] for inspection and access, or you provide sensors that help you do the inspection,” he says.

The space station falls into the second category. It’s a huge, complex craft, designed and constructed over decades by numerous manufacturers from different countries. With everything that had to be done just to get its modules built, launched, and assembled, easy access for inspection was not high on the priority list, so many parts of the station are not visible through the windows or even by the exterior cameras, says Studor.

In the station’s orbit is a vast field of debris. Objects range from abandoned upper launch stages, to fragments of satellites that have exploded or collided—the main source of space trash—to tiny pieces, such as flecks of chipped-off paint. (Impacts from paint chips caused enough damage to require replacing several space shuttle windows.) All are dangerous because all are traveling at enormous speed—17,500 mph on average.

The U.S. Space Surveillance Network, part of the Department of Defense, routinely tracks more than 21,000 pieces of orbital debris larger than a softball. NASA says there are 500,000 pieces in orbit larger than a marble, and millions more that are so small they are impossible to detect and track.

The orbital trash problem began to balloon in 2007, when China intentionally blew up one of its old weather satellites in an anti-satellite weapons test, creating about 3,000 pieces of space junk. Two years later, a defunct Russian satellite collided with a U.S. commercial satellite, adding 2,000 more pieces. Of all the larger debris known and catalogued, NASA estimates that more than 800 objects routinely fly in the station’s orbit, according to an assessment last October; that’s a 60 percent increase over the last 15 years.

It’s impossible to know how often the space station is hit by small debris, but in 1984, NASA launched the Long Duration Exposure Facility to measure impact rates on spacecraft in low Earth orbit. Over nearly six years, the city-bus-size spacecraft was pelted by orbital debris and micrometeoroids nearly 20,000 times, or more than 3,000 times a year. This was decades before the debris field reached the density it has today. But “it is not how many hits we get, it’s what they hit, what they are made of, and what we do to take that risk into account,” says Studor. “It is the risk to the reentering spacecraft with crew in them that worries us the most.” The Soyuz capsule, the one astronauts would use as an escape should they have to flee the station, stays docked for six-month intervals in a position where it is unshielded by any part of the station. “We have counted a couple dozen of what appear to be impacts to the Soyuz thermal blankets that happened in less than six months,” he says.
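The Long Duration Exposure Facility figures can be checked with quick arithmetic. The sketch below uses only the two rounded numbers quoted above (nearly 20,000 impacts over nearly six years), so the results are approximate; the function name is a hypothetical one chosen for illustration.

```python
# Rounded figures quoted in the article; both are "nearly" values,
# so the rates below are rough estimates, not measured data.
HITS = 20_000
YEARS_IN_ORBIT = 6


def impact_rates(hits, years):
    """Return (impacts per year, impacts per day) for a total hit count."""
    per_year = hits / years
    per_day = per_year / 365.25
    return per_year, per_day


per_year, per_day = impact_rates(HITS, YEARS_IN_ORBIT)
print(f"{per_year:.0f} impacts per year, about {per_day:.0f} per day")
```

On those rounded inputs the rate works out to roughly 3,300 impacts a year, or about nine a day, which squares with the "more than 3,000 times a year" figure.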

Because NASA can’t always protect the station and the docked spacecraft from debris, the agency is seeking ways to find the damage from significant incidents and to patch the holes as fast as possible. The first order of business is to swap older cameras mounted around the outside of the station with Nikon D4s that can zoom, pan, and tilt, and can be controlled from the ground, significantly reducing the number of blind spots on the exterior. The Nikons, which have top-of-the-line sensors that capture significant detail even in extremely low light, will be installed during three spacewalks in 2015.

In addition to mounted or hand-held cameras, what if astronauts could send one floating out wherever they needed it to go? Currently on the station are prototypes of small free-flying vehicles called SPHERES (Synchronized Position Hold, Engage, Reorient, Experimental Satellites), a joint project of NASA, the Defense Advanced Research Projects Agency, and the Massachusetts Institute of Technology. (The project originated as a challenge by an MIT professor to his students to design something similar to the lightsaber training droid in the original Star Wars.) Astronauts have been testing the bowling-ball-size satellites inside the station since 2006. With self-contained power, propulsion, computers, and navigation equipment, a vehicle like SPHERES could autonomously monitor systems on board and conduct inspections, maintenance, and repairs. In October, NASA announced a plan to send a Free Flying Robot, an advanced version of SPHERES, to the station in 2017.

David Hinkley is an engineer with The Aerospace Corporation, which is developing small satellites, similar to SPHERES, called AeroCubes. “If the inspector was constantly flying around and imaging, and if it was autonomous, then the station crew could ignore it,” he says. “The Houston ground crew, which is much, much larger, could process the images and task the inspector directly.” A free-flying inspector for the station needs two things, Hinkley says: the ability to communicate constantly with controllers, and image-recognition technology so it knows exactly where it is. That technology is not quite ready yet. Furthermore, he believes that station managers are not quite ready to let free-fliers loose, afraid of “unintended damage or other mischief” they might get into.

When it comes to making repairs, the more detail engineers can get before an astronaut is sent out on a spacewalk to fix the damage, the better. That’s where three-dimensional imaging comes in. A Florida company called Photon X has developed a sensor that extracts 3D information from a single still image; it’s generally used for tasks like facial recognition and will soon be used in some medical exams. Once a 3D camera is certified for spaceflight, it will be an essential inspection tool.

Cameras can inspect damage, but it’s unlikely they will catch the impact as it happens. So to monitor for leaks in the station walls, ultrasonic and piezoelectric (pressure-detecting) sensors will listen for specific frequencies or measure changes in the station’s internal atmosphere. NASA is testing a prototype system that uses eight to 12 sensors, Studor says, which are being calibrated to the normal background noise the station generates all the time.

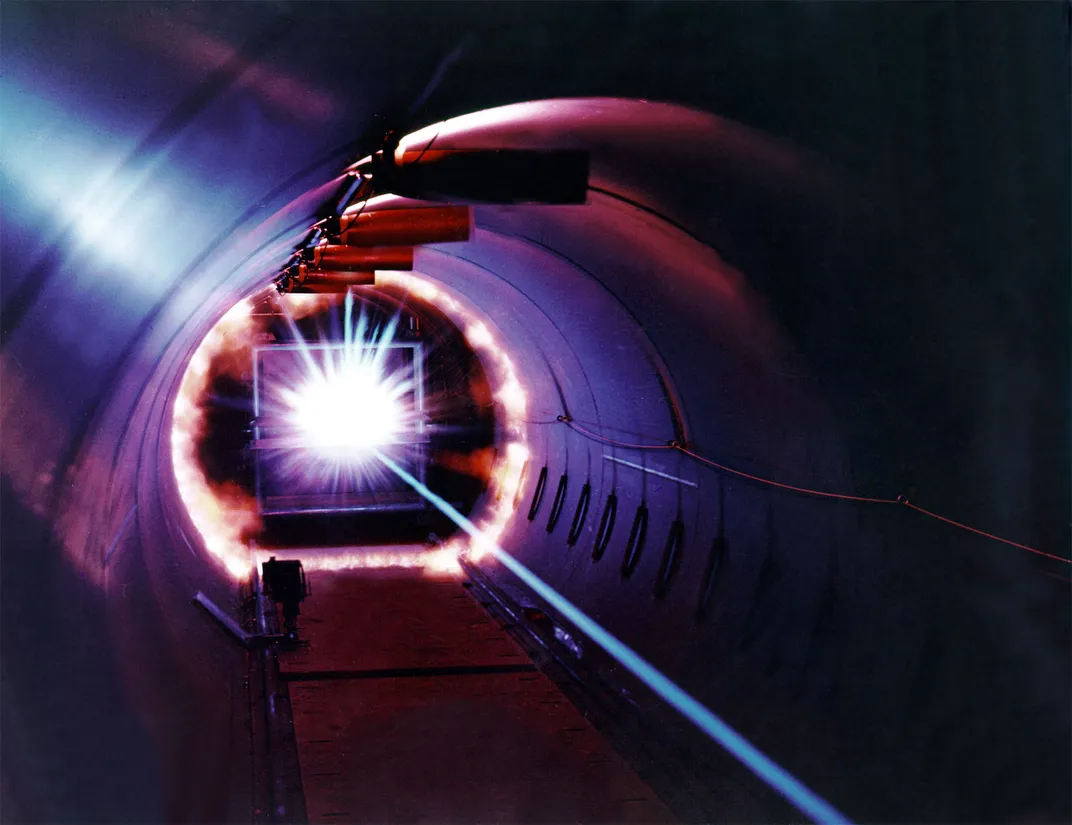

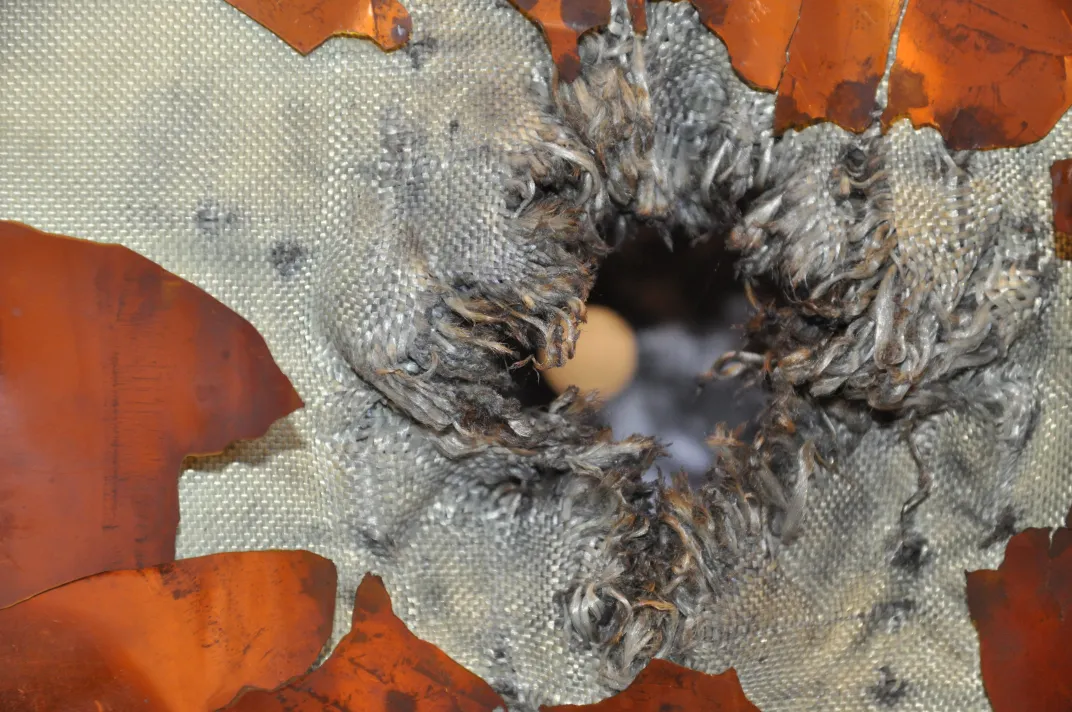

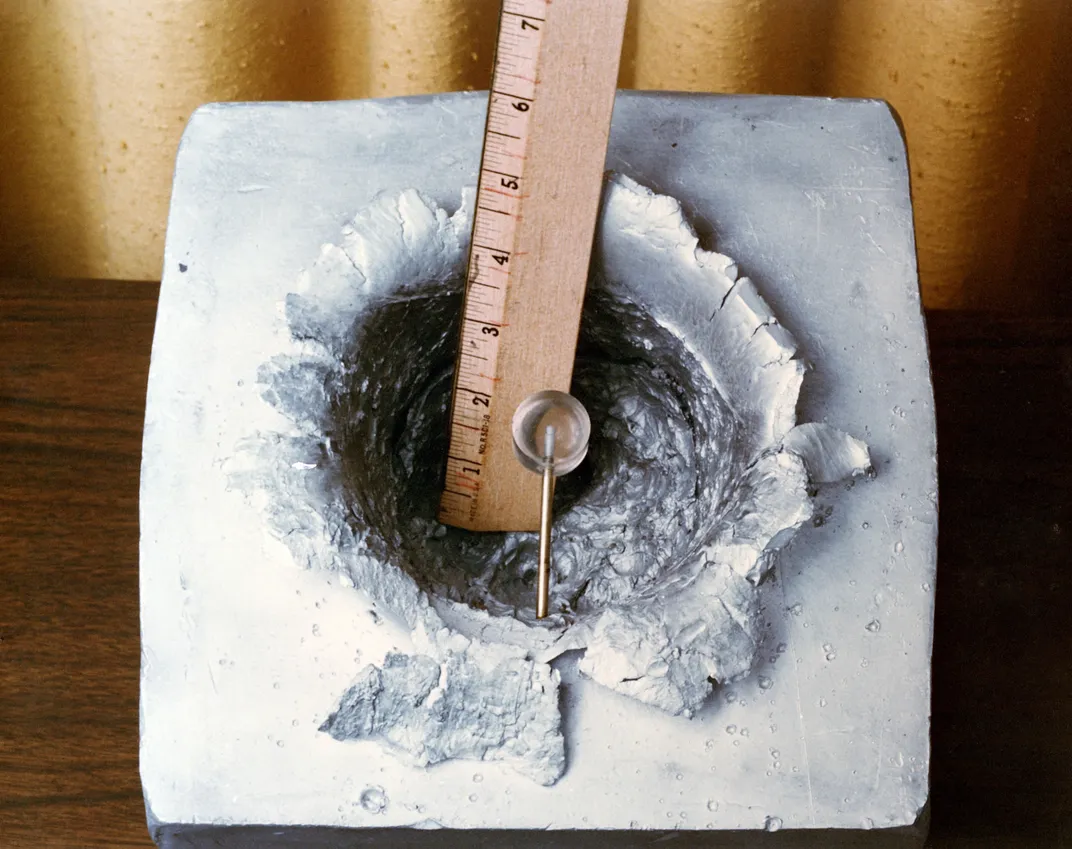

Detecting a breach immediately is critical. The station’s heavy shielding includes many areas covered in multiple layers of aluminum called Whipple shields, as well as Nextel and Kevlar fabric blankets. These are designed to absorb at least some of the energy from an impact, but if the object is large enough, it can penetrate the layers and ignite a fire when it hits the atmosphere inside and vaporizes into hot plasma.

Finding a leak from the inside is difficult because of the phone-booth-size racks of equipment that line many of the station walls. Moving them to search for damage would be cumbersome and time-consuming. “You could be looking behind this rack and that rack, and you’ve lost 30 minutes, and then you find out that [the leak] is behind a rack that takes an hour and a half to get behind because of all the connections,” Studor says. “Meanwhile, we know when the astronauts are going to get hypoxic, and they have to get out of there.” A well-distributed sensor system would give crew members a better chance of triangulating the leak’s location.
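How a distributed sensor system might narrow down a location can be sketched in a few lines. The example below is only an illustration of one common approach, time-difference-of-arrival: it is not a description of NASA's prototype, whose method the article does not specify. It assumes a flat, unrolled wall section, a sharp signal onset that each sensor can timestamp, and a single constant wave speed in the wall. The speed value, the sensor layout, and the function name `locate_leak` are all hypothetical.

```python
import math
from itertools import product

# Assumed, illustrative wave speed in the wall material (m/s).
WAVE_SPEED = 5000.0


def locate_leak(sensors, arrival_times, extent, step=0.05):
    """Grid-search a wall section for the point whose predicted
    arrival-time differences best match the measured ones.

    sensors       -- list of (x, y) sensor positions in meters
    arrival_times -- onset time recorded by each sensor, in seconds
    extent        -- (width, height) of the wall section in meters
    """
    # The emission time is unknown, so work with differences
    # relative to the first sensor; the unknown cancels out.
    measured = [t - arrival_times[0] for t in arrival_times]

    width, height = extent
    nx = int(round(width / step)) + 1
    ny = int(round(height / step)) + 1

    best_point, best_error = None, math.inf
    for i, j in product(range(nx), range(ny)):
        x, y = i * step, j * step
        dists = [math.hypot(x - sx, y - sy) for sx, sy in sensors]
        predicted = [(d - dists[0]) / WAVE_SPEED for d in dists]
        error = sum((p - m) ** 2 for p, m in zip(predicted, measured))
        if error < best_error:
            best_point, best_error = (x, y), error
    return best_point


# Simulated example: four sensors at the corners of a 4 m x 2 m panel.
sensors = [(0.0, 0.0), (4.0, 0.0), (0.0, 2.0), (4.0, 2.0)]
source = (1.5, 0.5)
times = [0.01 + math.hypot(source[0] - sx, source[1] - sy) / WAVE_SPEED
         for sx, sy in sensors]
print(locate_leak(sensors, times, (4.0, 2.0)))
```

A brute-force grid search is used here because it is easy to read and check. A real system would also have to cope with background noise, curved geometry, and wave speeds that vary with frequency and material.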

Once the damage is detected, assessing it requires an entirely different set of inspection technologies, Studor says. Even when impacts don’t penetrate the station’s hull, they can create pockmarks with sharp edges that can tear an astronaut’s spacesuit during a spacewalk. An actual breach can create cracks that propagate and peel back layers of sharp aluminum around the exit wound. Crew members need to see images of these hazards, as well as assess exactly where the damage has occurred relative to load-bearing parts of the wall.

NASA envisions sending out controllable snake-like robots, essentially hoses that can be manipulated remotely. The snakes would have 3D cameras at their tips and slither into areas of the station where humans can’t go. The idea, in development for more than a decade, is to give the robots flexibility and dexterity using artificial-muscle technology based on electroactive polymers, which can change shape or size when stimulated by an electric field. But this technology has been difficult to harness, says Yoseph Bar-Cohen, a senior research scientist at the Jet Propulsion Laboratory in Pasadena, California. “Unless they are sizable, you cannot get significant force from these materials,” he says. In 2005, Bar-Cohen staged the first of a series of arm wrestling matches at JPL between humans and robot arms driven by electroactive polymers. The robot arms, at least so far, don’t have a chance against their human opponents. “Once we have a winning arm, we know we have it made,” Bar-Cohen says. “It may take a long time.”

Outside the station, assessing damage from an impact presents its own challenges. If the astronauts are sent on a spacewalk to inspect it, they’ll need novel tools. In many cases, Studor says, the damage could be obscured by the very shielding meant to keep it at a minimum. The entry wound might be barely visible. So astronauts may someday use a hand-held backscatter X-ray imager that is based on technology similar to that in airport security scanners. Such imagers would allow astronauts to search for holes by peering into the layers of metal and synthetic fabric shielding. The challenge is making a unit that is small enough for an astronaut to carry and much more energy-efficient than current imagers. It’s much more likely that astronauts would first use a hand-held, camera-tipped borescope, which they could stick down a hole past exterior shielding, he says.

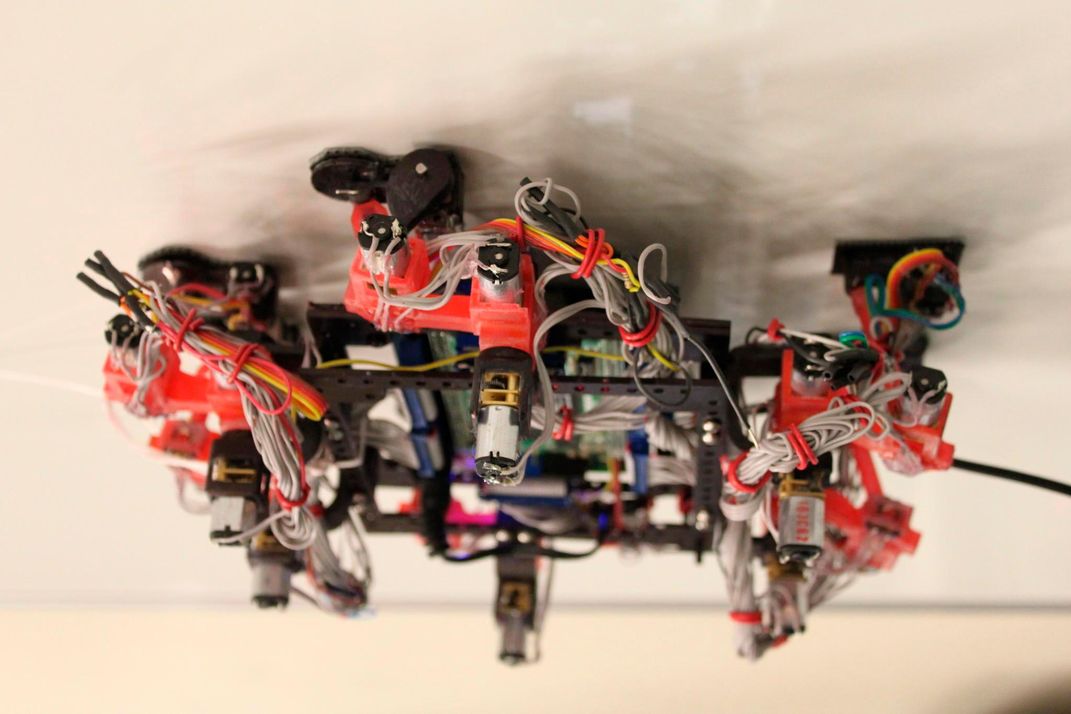

Sending an astronaut outside is always risky, however. That’s why crawling robots—probably the ultimate in spacecraft inspection technology—may find a place on a future space station crew.

Building a robot that can crawl on the outside of a spacecraft in zero gravity without floating away is no simple challenge. One solution, under development at JPL, mimics the anatomy of a gecko, one of nature’s best climbers. The gecko’s sticky feet are marvels of evolution, allowing the animal to cling to walls and seemingly defy gravity. Biologists discovered that microscopic bristles on gecko toes are composed of hundreds and sometimes thousands of even smaller hairs called spatulae. The tiny toe hairs are only about 200 nanometers across (0.2 percent of the width of a human hair), so they interact with the gecko’s traveling surface on a molecular level, through van der Waals forces, a net attractive force that is generated between molecular electron clouds.

JPL engineer Aaron Parness, who became interested in robotics while an undergraduate, began studying these forces in graduate school at Stanford. Wanting to build climbing robots, he and his classmates partnered with biologists at the University of California at Berkeley to study the gecko. For his doctoral project, Parness designed and fabricated synthetic gecko feet.

In 2010 Parness came to JPL, where he and his colleagues have developed synthetic climbers made of a silicone rubber material. The tiny polymer hairs measure 20 microns in diameter, bigger than the gecko’s spatulae but still capable of generating van der Waals forces. The stickiness of the silicone rubber “is based on the geometry of the hairs,” Parness says. “It’s not based on temperature or pressure, the things that would trip you up in space. These van der Waals forces are not sensitive to that.”

A conventional robot crawling along the outside of the station would be confronted with a challenge astronauts know all too well. If you put pressure on a surface to make contact, you push yourself away. But a robot equipped with polymer pads that mimic the adhesiveness of the gecko’s feet should be able to grip whatever it comes in contact with, Parness says.

These robot crawlers could be parked on the outside of the station until they’re called into action to survey damage. That would reduce unnecessary airlock usage.

Parness says that in the short term, spacewalking astronauts could use the technology in a hand-held tool, something the size of a small notebook with a handle on one side and gripper pads on the other. “You could…pull a trigger to get it to stick, and then pull the trigger again to un-stick it and move it to a new spot,” he says.

Parness and his colleagues hope to test a gecko-padded robot prototype on the station in 2016. Today, they’re working on a robot with multiple pairs of pads that can crawl along uneven surfaces and still maintain its grip. An operation like the replacement of a failed cooling pump on the station last December, which required two spacewalks and several hours of dangerous work, may someday be handled by a smart, Spider-Man-like robot, says Parness.

Technologies developed to inspect and repair the space station could also be useful for any of the more than 1,000 active satellites in orbit. In 2011, NASA affixed the Robotic Refueling Mission module to the exterior of the station and is currently working on the second phase: testing its inspection tool, VIPIR, for Visual Inspection Poseable Invertebrate Robot. Groups like DARPA and ATK are also working on the problem; representatives from both were at a conference last August on the future of on-orbit servicing, and DARPA officials there said they hope to get their Phoenix satellite inspect-and-repair project working by 2019.

Developing any of the inspection and repair technologies will be costly, and the research may be funded by private industry—but not necessarily the private space industry. The petroleum and nuclear power industries, for example, have a great need for robotic vehicles and imagers that can withstand extreme environments, whether underground or at the bottom of the ocean or in a place filled with radiation.

“NASA doesn’t have enough money to do it itself, and other industries want the same kind of thing,” Studor says. “Let’s show [industry] that there’s a path to get what they want if they invest in this technology.”

Whether the funds are from private or public organizations, it would be wise to increase investments in these technologies now so that if—or more likely, when—a piece of orbital debris comes along one day and punches through a space station wall, the astronauts can deal with it swiftly instead of abandoning ship.

