Almost Like Being There

Telepresence and VR may be the smart way to explore the Martian surface—and the only way to go farther.

![image](https://tf-cmsv2-smithsonianmag-media.s3.amazonaws.com/filer/ae/a7/aea79f42-3453-416b-8e58-7e7742cd8c11/05h_jj2015_fpo_hololensmars20150121-16_agaig-web-resize.jpg)

To appreciate what virtual reality could bring to space exploration, you first have to face reality. So here it is, straight up: We won’t be landing astronauts on Mars anytime soon—not in 10 years, not in 20, maybe not in 50, given the current inertia in U.S. space policy. It’s a stretch even to say NASA is working on such a mission, considering that most of the required hardware is still in the conceptual stage, and that one advisory group after another has told the space agency, in ever blunter terms, that it can’t afford a Mars expedition with its current budget. Here’s the NASA inspector general, last February: “It is unlikely that NASA would be able to conduct any manned [Mars] surface exploration missions until the late 2030s at the earliest.” And that’s only if engineers start work on the lander, habitat, and other surface systems immediately—and they can’t, because NASA doesn’t have the money.

Now consider an alternative to astronauts landing on Mars that might be nearly as good—maybe, in the long run, better: astronauts in Mars orbit, operating sophisticated robots and rovers on the planet’s surface, using telepresence to see through the robots’ eyes and feel what they touch.

Telepresence, a currently available (and constantly improving) set of technologies that lets users interact directly, in near-real time, with a remote environment, is one way to explore Mars without having to go there. Eventually, there will be another way: virtual reality, a technology now in its infancy that lets users immerse themselves in imagery or other kinds of data. The ultimate promise of VR is a multi-sensory simulation so convincing that it’s hard to tell it from the real thing.

Last March, the Planetary Society, the most influential U.S. space advocacy group, lent its support to a strategy, developed at NASA’s Jet Propulsion Laboratory in California, that would put astronauts in Mars orbit around 2033, where they would spend up to a year tele-operating robots on the Martian surface. Driving rovers from close range would avoid the signal delays of up to 24 minutes each way now experienced by NASA’s rover operators on Earth. The first Mars landing (a quick, 24-day campout) would slip to 2039, later than NASA has advertised. A full-up expedition wouldn’t happen until 2043.

The main advantage of this strategy is savings, according to Scott Hubbard of Stanford University, who led a Planetary Society workshop to consider the plan, and who used to head NASA’s Mars program. But an orbital mission would also “enable scientific exploration of Mars and its moons while developing essential experience in human travel from Earth to the Mars system,” according to the workshop report.

NASA hasn’t officially endorsed the idea, but appears interested. Richard Zurek, JPL’s chief scientist for Mars exploration, said in an online Reddit forum in September, “Presently, NASA is looking into the possibility of sending humans to the vicinity of Mars in the early 2030s. In this scenario, the earliest humans to the surface would be in the late 2030s.” Other agency officials have made similar statements recently, pointedly talking about journeys to Mars, rather than landings on Mars, in the 2030s.

For some, this sounds like a retreat: Why send people all those millions of miles, only to stop just short of the goal?

The obvious answers are money and safety—not landing would reduce some of the risk of a Mars voyage. But there’s another reason. Recent findings about present-day water on Mars have heightened awareness of a long-looming “planetary protection” dilemma. Any place where water might be present could also harbor Martian microbes, if they exist. A Mars lander going to one of these potentially watery regions would likely have to be sterilized to avoid introducing terrestrial microbes that might harm native Martian life, or confuse instruments built to detect it (which is, after all, our main interest in Mars). A rover can be sterilized for a few tens of millions of dollars. But a crew of astronauts, with all their attendant biota? Keeping them clean is a daunting problem. Unless we find a solution, Mars explorers, even if they land on the surface, could be barred from stepping onto any zone that might harbor life, in which case they’ll have no choice but to tele-operate rovers to do the everyday work of surveying and sampling.

These roadblocks to human Mars exploration may be what motivates NASA to embrace telepresence in the near term. But seeing it as a backup plan, or a poor substitute for real exploration, misses the point, according to Dan Lester, a University of Texas astronomer who has been among the most vocal proponents of telepresence.

Lester and others believe space exploration in 2040 will be very different from what it was in the Apollo era, in large part due to technologies emerging today in fields from robotics to entertainment. Capabilities that seemed like science fiction in 1969 are about to go mainstream: In the next few months the first consumer-friendly virtual reality headsets will hit the market. “Space telepresence isn’t based on technology that will be developed by NASA,” says Lester. “It’s not rocket science. It’s real-world technology that will be pushed from many commercial directions.” What’s required of NASA is a culture shift, a rethinking of how we explore, and how we interact with robots. “Getting boots dirty in gravity wells is a very expensive proposition,” says Lester. Using robotic eyes and limbs as proxies for our own while our brains stay behind, he believes, we could visit more places for less money. People talk about the need for human-robot cooperation, Lester says, “without really understanding what that cooperation might mean. It could mean an R2-D2 following obediently behind an astronaut. Or it could mean telepresence.”

David Mindell has seen a similar culture shift in the world of undersea exploration. A professor of aeronautics and astronautics at MIT, he also has years of engineering experience with robotic underwater vehicles. In his new book, Our Robots, Ourselves, he describes the battles between oceanographers who insisted on the need to explore the deep ocean in person, inside pressurized vehicles, and those who found that remotely operated vehicles (ROVs) could give the same experience—or often a better one—from the comfort of surface ships or even their labs. By and large, the ROVs won. And it’s still people doing the exploring. “All we have done is changed the place where the people are when they do this work,” Mindell writes.

**********

Knute Brekke is talking to me by phone from a ship in the Gulf of California, where he’s on a research voyage for the Monterey Bay Aquarium Research Institute. As the chief pilot for the ROV Doc Ricketts (named for the marine biologist in Steinbeck’s Cannery Row), he leads a small team of pilots who take turns driving the two-armed undersea robot as it surveys the ocean floor, pausing occasionally to examine a rock formation or capture a delicate sea creature using a suction device held by one arm. The pilots remain safely on the surface, while Doc Ricketts cruises past clam beds more than a mile below.

While Brekke and I chat, he’s watching another ROV pilot drive a “push core” into the mud to take a sample. The pilot holds a controller that slaves the robot arm to his, with force sensors that let him feel when he’s pushed too hard. This is hands-on fieldwork, except that the hands are robotic.

On giant, high-definition monitors, the pilots and scientists watch video from several of Doc Ricketts’ cameras. They can call up any view and use either arm (the arms themselves can be swapped out, depending on the job), plus a whole toolbox of attachments, to perform practically any task they might want to if they were there in person. “I can’t think of anything we have ever attempted to do that we haven’t been able to do,” says Brekke. This is the way most underwater work is done in 2015.

Sending robots to the deep ocean is similar in many ways to sending them into space. (David Akin, a University of Maryland roboticist who has designed machines for both, thinks space is easier.) But there are important differences, including the critical matter of latency, or signal delay. Oceangoing ROVs are connected to surface ships by high-speed fiber optic cables, which supply all the data and video the pilot needs, instantaneously. NASA’s objects of study are typically millions of miles away, and even the fastest data link can’t beat the speed of light. A radio command from Earth takes between four and 24 minutes to reach Mars, depending on where the two planets are in their orbits, and the news that your robo-arm just hit a rock takes the same length of time to reach you back on Earth. That’s why most tele-operation scenarios would put the robots and operators much closer together.
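Because every command has to go out and its result has to come back, the one-way delay is paid twice on each "move, then look" cycle. The short sketch below uses the article's 4- and 24-minute one-way figures; the idea that an operator issues exactly one command per round trip is a simplifying assumption for illustration, not a description of how NASA actually plans rover activity.

```python
# Sketch: how signal delay limits a "command, then see the result" work loop.
# One-way delays are the article's 4- and 24-minute Earth-to-Mars figures;
# the one-command-per-round-trip model is an illustrative assumption.

def round_trip_minutes(one_way_minutes: float) -> float:
    """A command goes out and its result comes back over the same delay."""
    return 2 * one_way_minutes

def loops_per_hour(one_way_minutes: float) -> float:
    """How many wait-for-feedback cycles fit in one hour of operator time."""
    return 60 / round_trip_minutes(one_way_minutes)

for one_way in (4, 24):
    print(f"one-way {one_way} min -> round trip {round_trip_minutes(one_way)} min, "
          f"{loops_per_hour(one_way):.2f} feedback loops per hour")
```

Under these assumptions an operator on Earth gets somewhere between 7.5 and 1.25 feedback loops per hour, which is why moving the operator closer to the robot matters so much.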

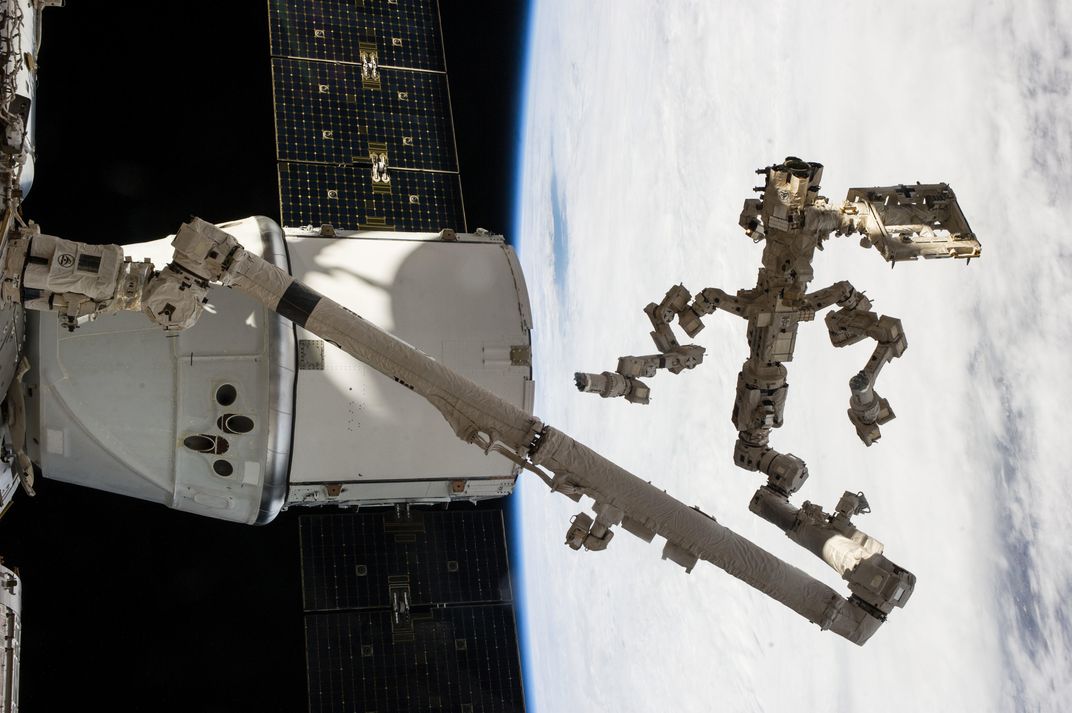

Perhaps the best current example of a tele-operated space robot is the vaguely humanoid, two-armed special-purpose dexterous manipulator (SPDM), also known as Dextre, which rides on the end of the International Space Station’s 58-foot-long manipulator arm. Dextre was originally meant to be operated by astronauts from inside the station. But shortly after it was installed, in 2008, NASA decided that tele-operating the robot from the ground would relieve some of the astronauts’ workload.

In 2011 controllers at NASA’s Johnson Space Center in Houston used Dextre for its first real job—unpacking supplies from a cargo ship while the astronauts on the station slept. There have been many jobs since, and next year the robot will take a hardware handoff from a spacewalking astronaut for the first time. In the conservative, safety-minded culture of NASA, that signals a vote of confidence in the system. In effect, it allows Dextre’s operators in Houston to be telepresent outside the station, lending a pair of mechanical hands to help the busy crew.

And those hands are growing in capability. In 2004, when Dextre was still in development, NASA had to decide who should get the job of servicing the Hubble Space Telescope for the last time: the astronauts or Dextre. The robot was deemed unready for the challenge. The verdict might go differently today. Last spring Dextre got in some practice for a delicate fuel transfer in space planned for 2017—a job that requires millimeter-scale vision and dexterity, and which no astronaut has ever performed. If successful, it could point the way to a profitable business using dexterous, tele-operated robots to refuel satellites in orbit.

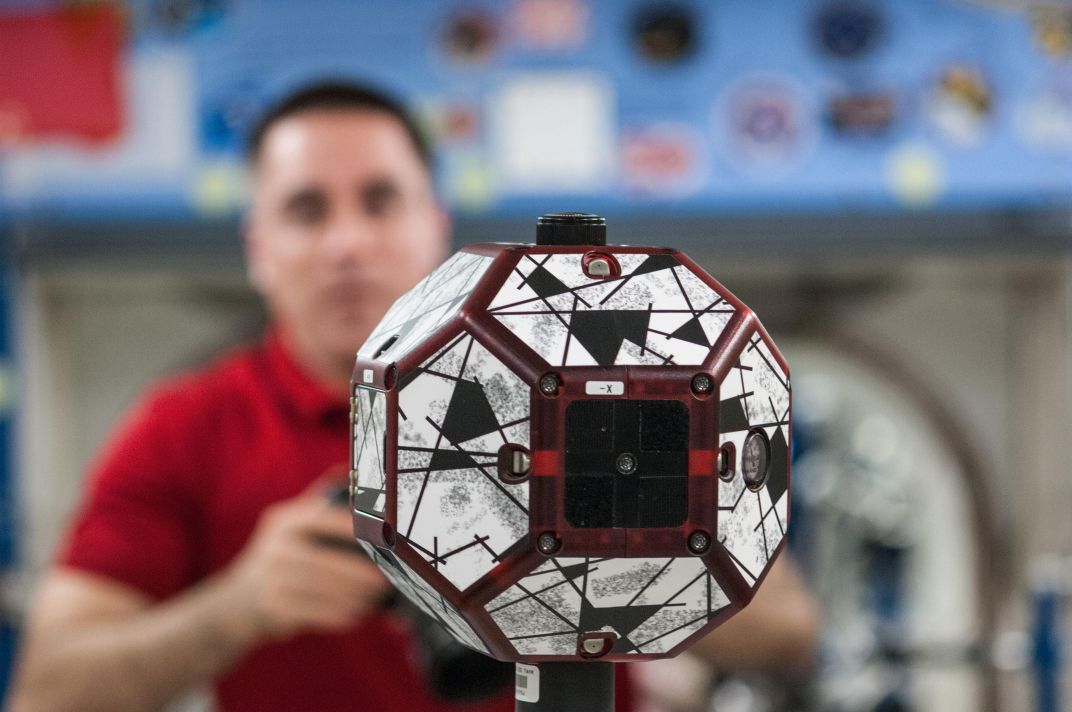

Just as Dextre has taken on some jobs outside the station, Robonaut is being groomed for inside work. NASA’s photogenic humanoid robot arrived on the station in 2011, and since then has demonstrated increasingly complex skills in different operational modes, from autonomous to ground-controlled to tele-operated by the station astronauts, who wear glasses and gloves that enable them to see through Robonaut’s eyes and control what the robot touches. So far it’s been all research—NASA is cautious about letting a large, bulky robot move around a cabin full of astronauts on its own—but the plan is for Robonaut to eventually take on routine tasks it has already demonstrated, from cleaning handrails to changing air filters. Some of that work will be autonomous, but much will be supervised from the ground.

Experience gained with tele-operating robots on the station will be invaluable when NASA starts sending robotic landers to the moon in the coming decade. The Resource Prospector, a rover tentatively planned for launch in 2020 to survey water ice at the lunar poles and demonstrate how it could be mined, will be tele-operated from Earth. The mission plans to use software called VERVE (Visual Environment for Remote and Virtual Exploration), which overlays the rover’s path on a 3D map of the site created from existing lunar imagery. The driver can then select a new “waypoint” destination for the rover on the map. Live camera feeds show obstacles to avoid.
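The waypoint idea can be illustrated with a toy model: the driver picks a sequence of map positions, and the software estimates how far and how long the rover will travel. This is a hypothetical sketch, not VERVE's actual data model or API; the flat (x, y) map in meters, straight-line legs, and constant speed are all assumptions, and the default speed is the "nearly 1 mph" top speed mentioned for Resource Prospector, so the time it gives is a best case.

```python
import math

# Hypothetical sketch of waypoint driving; NOT VERVE's actual data model or API.
# Waypoints are (x, y) positions in meters on a flat site map, and the rover is
# assumed to drive straight between them at a constant speed.

MPH_TO_M_PER_S = 0.44704  # 1 mile per hour expressed in meters per second

def path_length_m(waypoints):
    """Total straight-line distance through the waypoints, in order."""
    return sum(math.dist(a, b) for a, b in zip(waypoints, waypoints[1:]))

def drive_time_s(waypoints, speed_mph=1.0):
    """Time to cover the path at constant speed (1 mph default, a best case)."""
    return path_length_m(waypoints) / (speed_mph * MPH_TO_M_PER_S)

route = [(0.0, 0.0), (30.0, 40.0), (30.0, 100.0)]  # illustrative positions only
print(f"{path_length_m(route):.1f} m, about {drive_time_s(route) / 60:.1f} min")
```

A real tool would also plan around obstacles seen in the live camera feeds; the sketch only shows why picking a waypoint on a map is enough to generate a drive.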

Daniel Andrews of NASA’s Ames Research Center, project manager for Resource Prospector, says he was “terribly impressed by this very visually intuitive tool” during recent field trials, in which operators at Ames drove a prototype of the rover in Houston. During the lunar mission they’ll want to move quickly, says Andrews, because their time on the moon may be short—potentially as little as a week or so, depending on the landing site. The rover will be driven in near-real time (allowing for the signal delay) at “almost a human-like cadence”; when the rover isn’t actively surveying, it will reach a top speed of nearly 1 mph. This is very different from NASA’s Mars rovers, which typically receive just one set of commands a day, followed by lots of waiting, analysis, and planning of the next day’s course.

The faster pace is possible because the signal delay at the moon is a mere 2.6 seconds. That amount of latency is acceptable, says Lester, “if all you’re doing is driving around.” But when future missions start adding force feedback and other haptic (touch) capabilities similar to those Doc Ricketts has on the ocean floor, latency becomes a problem. It “screws with your brain,” Lester says. On a NEEMO (NASA Extreme Environment Mission Operations) training expedition, astronauts living in an underwater habitat off Florida experimented with habitat-to-shore telerobotics, and found that with the 2.6-second Earth-moon latency, tying a shoelace remotely took almost 10 minutes. Without a delay, it took just a minute.
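The 2.6-second figure is essentially the round-trip light time to the moon. The sketch below assumes the mean Earth-moon distance of about 384,400 km and ignores relay, switching, and processing time, all of which add to the delay in practice.

```python
# Sketch: where the moon's roughly 2.6-second delay comes from.
# Assumes the mean Earth-moon distance (about 384,400 km) and ignores
# relay, switching, and processing time, which add to the real figure.

SPEED_OF_LIGHT_KM_S = 299_792.458

def round_trip_s(distance_km: float) -> float:
    """Light-travel time for a signal to go out and a reply to come back."""
    return 2 * distance_km / SPEED_OF_LIGHT_KM_S

print(f"{round_trip_s(384_400):.2f} s")
```

This prints about 2.56 seconds, consistent with the 2.6 seconds quoted once real-world overhead is rounded in.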

Strictly speaking, the driving mode for Resource Prospector should be called tele-operation. Lester reserves the term “telepresence” for true, real-time operation, with no perceptible delay between your joystick command or exoskeleton-clad arm motion and the movement of the robot arm.

NASA doesn’t have a lot of experience with what it calls low-latency tele-operations, or LLT, and there’s some disagreement on exactly how low a latency you need to achieve a true feeling of presence. Most people settle on something around the human eye-hand reaction time, which is 200 milliseconds, or one-fifth of a second. A recent study of the effects of latency on robotic surgery, conducted for the Department of Defense by Florida Hospital’s Nicholson Center, found that when lag times are under 200 milliseconds, surgeons can tele-operate safely. The researchers also measured communication delays between different cities, and found latencies under 200 milliseconds for places as widely separated as Orlando, Florida, and Fort Worth, Texas, which are 1,200 miles apart. They concluded that “telesurgery is possible and generally safe for large areas within the United States. Limitations are no longer due to lag time but rather factors associated with reliability, social acceptance, insurance and legal liability.”
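A rough calculation suggests why a 1,200-mile link can stay well under 200 milliseconds. The assumptions here are not from the study: light in silica fiber travels at roughly c divided by a refractive index of about 1.47, and the cable route is taken to be exactly 1,200 miles, although real routes are longer.

```python
# Sketch: fiber propagation delay between two cities about 1,200 miles apart.
# Assumptions (not from the study): light in optical fiber travels at roughly
# c / 1.47, and the cable route equals the 1,200-mile figure; real routes are longer.

SPEED_OF_LIGHT_KM_S = 299_792.458
FIBER_INDEX = 1.47      # typical refractive index of silica fiber (assumed)
KM_PER_MILE = 1.609344

def fiber_round_trip_ms(miles: float) -> float:
    """Round-trip propagation time through fiber, in milliseconds."""
    km = miles * KM_PER_MILE
    speed_km_s = SPEED_OF_LIGHT_KM_S / FIBER_INDEX
    return 2 * km / speed_km_s * 1000

print(f"{fiber_round_trip_ms(1200):.1f} ms of a 200 ms budget")
```

Propagation comes to roughly 19 milliseconds, under a tenth of the budget, which suggests that most of any measured lag on terrestrial links comes from routing, switching, and processing rather than distance.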

Lester would like to see the same insight applied to space exploration. Assuming reliable, fault-tolerant communications and using a latency of 200 milliseconds as a benchmark, he has calculated how far an operator can be from a planetary robot and still feel true telepresence: 30,000 kilometers, or 18,600 miles. Astronauts in a high orbit around the moon (where NASA envisions putting a habitable outpost in the 2020s) would be within that “cognitive horizon” when operating robots on the lunar surface. So would crews in Martian orbit, doing work on Mars.
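The arithmetic behind a 30,000-kilometer "cognitive horizon" can be reconstructed by letting a 200-millisecond round trip be spent entirely on light travel. This is a plausible reconstruction, not necessarily Lester's exact method; it assumes no time is lost to processing, video encoding, or relay hops.

```python
# Sketch of the arithmetic behind a 200 ms "cognitive horizon".
# Assumes the whole 200 ms budget is spent on light travel (out and back),
# with no allowance for processing, video encoding, or relay hops.

SPEED_OF_LIGHT_KM_S = 299_792.458
KM_PER_MILE = 1.609344

def max_operator_distance_km(latency_budget_s: float) -> float:
    """Farthest distance at which a round trip still fits within the budget."""
    return SPEED_OF_LIGHT_KM_S * latency_budget_s / 2

horizon_km = max_operator_distance_km(0.200)
print(f"{horizon_km:,.0f} km ({horizon_km / KM_PER_MILE:,.0f} miles)")
```

The result, just under 30,000 km (about 18,600 miles), matches the figure quoted. By the same measure the moon, at about 384,400 km, lies far outside the horizon for an operator on Earth, which is why the operator has to be in orbit nearby.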

The basic technology for such missions is already in hand, although questions remain about how it would work in practice. For example, NASA has done experiments showing that station astronauts can readily direct robots on Earth to deploy thin film antennas of the kind needed to construct future radio telescopes on the lunar surface. Would astronauts in lunar orbit have the same sensation driving a rover in the one-sixth gravity of the moon?

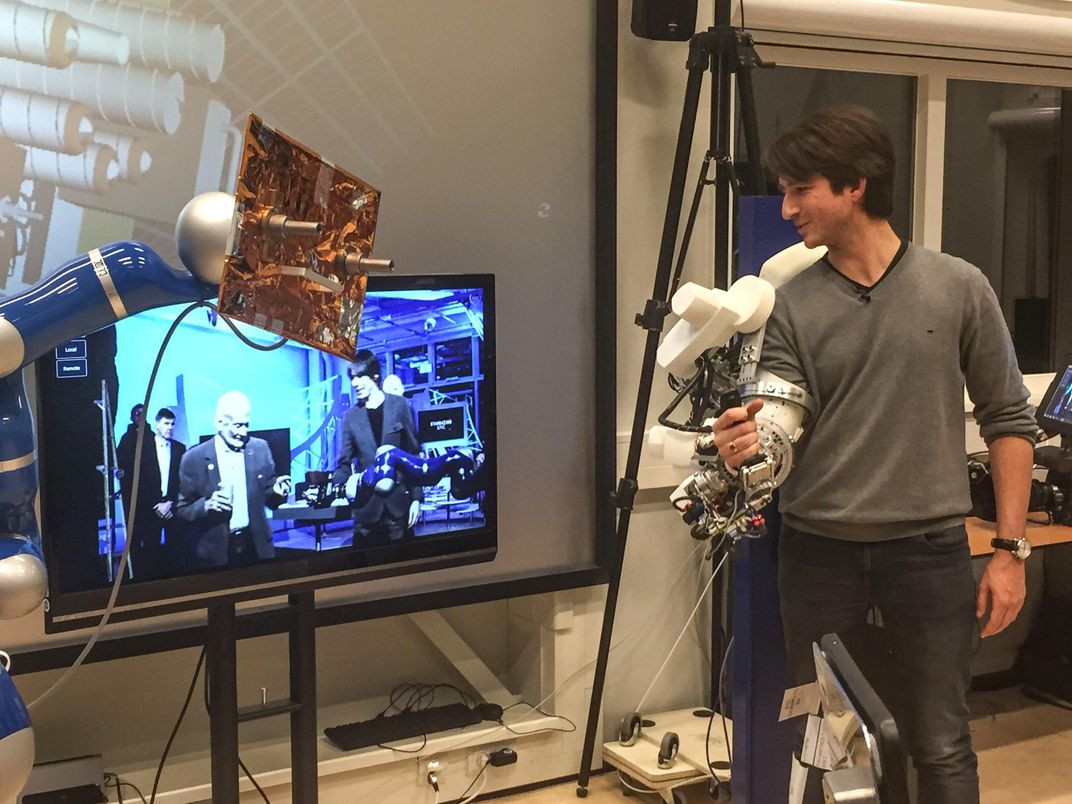

The European Space Agency has shown a strong interest in tele-operations on the moon, and currently is leading an international study called HERACLES (Human-Enhanced Architecture and Capability for Lunar Exploration and Science). A promotional video for the project includes a scene at ESA’s Telerobotics and Haptics Laboratory in Noordwijk, the Netherlands, with a title screen reading “People no longer have to be in space to work there.”

André Schiele, the lab’s principal investigator, has run several experiments with astronauts on the space station to measure their reaction times and coordination when operating devices on Earth, using both visual and haptic inputs, as well as different amounts of latency. Last September, Danish astronaut Andy Mogensen conducted the most advanced test yet on the station, successfully directing a robot on Earth to roll over to a target task board and insert a metal pin securely in a round hole, which demanded a precision of less than 0.2 millimeter. The test went far better than expected. Despite the fact that Mogensen had never trained for it, had never seen the robot, and (due to problems with communications relays) experienced latencies of up to 12 seconds instead of the planned 850 milliseconds, he was still able to use both visual and force feedback cues and insert the pin.

These are simple demonstrations, but they give confidence that 10 or 15 years from now, astronauts could work efficiently on the moon from lunar orbit (within Lester’s cognitive horizon). “We’re talking about people in a habitat, putting their presence down on the surface, and having an immersive experience where, for all intents and purposes, they are there,” Lester says.

Meanwhile, virtual reality is becoming, well, real, and that could let more people share in the experience of space exploration. Space and VR have always been a good match. For 20 years, a small team at Johnson’s VRLab has been training astronauts with ever more faithful simulations of the spacecraft and payloads they handle in space. Each year the software and hardware get better, the graphics more lifelike. Now the outside world is catching up. A new generation of high-quality VR headsets will soon be introduced for the consumer market, and many tech-watchers believe these and other immersive devices will transform culture in the same way that touch-screen smartphones did after they appeared in 2007. (Some 200,000 developers have registered to create apps and games for the Oculus Rift, a headset that will arrive in stores next year.) The fact that the commercial market is now driving the push toward high-quality VR is “fantastic,” says Evelyn Miralles, the Lead VR Innovator/Principal Engineer for NASA’s VRLab. Her team plans to send an Oculus headset up to the space station for evaluation next year.

Where will these seemingly disparate technical developments, which aren’t fully mature, let alone part of a coherent strategy, lead? Picture this: It’s 2040, and dozens of rovers explore the Martian surface. Using high-speed optical data links, they send back floods of 3D imagery and any other data they’ve scanned, so citizens and scientists can experience Mars in whatever immersive media are then current. This type of space exploration doesn’t dead-end at Mars, as the familiar boots-on-the-ground approach does. Nobody envisions astronauts landing on the 865-degree (Fahrenheit) surface of Venus or the –391-degree surface of Pluto. A telepresence robot conceivably could.

For those who think this sounds hollow and bloodless, a timid turning away from human exploration, consider this: Unless we bio-engineer our bodies, or spend centuries terraforming other planets, we will never experience these places directly. We’ll never feel a Martian breeze on our faces or swim in a lake on Titan. We’ll always be looking out from inside helmets and spacesuits, at least one step removed from the raw experience. It’s only a matter of how removed.